I just read this preprint[1] in which researchers have adapted a standard personality test (a version of the OCEAN Big 5 model) to assess LLMs’ personality traits.

They found that different AI models differ only slightly across personality traits, and that a clear pattern emerges.

They chose to test BERT-family models rather than ChatGPT models because BERT models answer a multiple-choice personality test more consistently over time. GPT models yield different personalities depending on the prompt, as we will see later.

Recap of basic personality traits

For reference, the OCEAN model of personality measures 5 personality traits in humans.

O: Openness (Tendency to explore novelty and imagine)

C: Conscientiousness (Tendency to be thorough and meticulous)

E: Extroversion (Tendency to seek people out)

A: Agreeableness (Tendency to get along with people, be cooperative, & be trusting)

N: Neuroticism (Tendency to have negative thoughts and anxiety)

Typical LLM personality traits

A clear pattern emerges across most LLMs’ personalities:

Neuroticism is obviously low; it’s an AI. If it worried and had anxiety, our collective neuroticism would cross theoretical limits! Panic. Pure unadulterated panic.

Openness is medium. This most likely happens because the LLMs are trained to connect the dots and pay attention to prompts. Without openness, an AI will be very bad at processing unexpected text coming from inputs and then generating creative outputs.

Extroversion, Agreeableness, and Conscientiousness are typically high. This is also expected because an LLM is language-focused and built to interact with humans (that’s almost the definition of extroversion). Agreeableness is expected because an LLM is supposed to assist a human and work cooperatively. Conscientiousness is high because an LLM is built to be accurate and to boost human productivity by paying attention to details in the inputs. Accuracy and attention to detail are literally evidence of conscientiousness.

But here’s the problem: none of these traits says anything meaningful about the LLM.

Imagine the LLM showing a high understanding of your problems. Does it really have high empathy?

No, it’s built to pay attention, consider anything you are willing to consider, respond to it, and affirm that it understands what you say.

If an AI has a strong knowledge base, acts like a know-it-all, and is also biased toward showing self-love (because it has to create a warm, positive atmosphere), is it really narcissistic?

No, it’s supposed to conversationally give you information beyond any single human’s capacity. It is going to do that better. After all, it’s been trained on more pages of information than any human ever.

Empathy and narcissism are traits that mean something only because there is variation in how much of those traits people have. That is, empathy & narcissism are seen on a spectrum in everyday life. LLMs are supposed to uphold a standard of empathy-like behavior consistently. That lack of variation makes the trait a little meaningless in AI.

Why is it meaningless to say AI has a personality profile? Researchers gave 3 unique prompts[2] to ChatGPT and Llama 2 models, asking them to self-assess their own personality using standard personality measures meant for humans. The LLMs showed dramatically different personality traits depending on the prompt. The study shows 2 things. One, tools meant to understand human personality are a very poor way to understand AI behavior. Two, if the prompt’s instruction changes personality scores during self-assessment, then the AI is capable of having any personality trait on instruction.

You can ask it to be an open-minded tutor. A neurotic, worrying partner. A calm, stable leader. Any personality profile can be adopted by an LLM. The concept of a true self doesn’t really exist for LLMs because their behavior is prompt-dependent. In fact, even for humans, the concept of a “true self” is largely meaningless, because our behavior also depends heavily on prompts, which we call the environment and lifelong learning.

Why physical AI will need personality traits

We’ve not yet reached the level of physical AI[3] that NVIDIA’s Jensen Huang speaks of, so for now we can set aside the physical components of personality, such as behavior shaped by looks, tolerance, engagement with sensations, etc. These factors manifest in humans more often than we realize: our biases toward people who look a certain way, our ways of shouting or yelling, our tendency to seek out or avoid loud, noisy environments, our tendency to move our bodies fluidly or stiffly, etc. All of those aspects of our personality are beyond LLMs. They all need a physical body to be meaningful.

After that, we can consider deeper layers of personality, like sensory processing sensitivity (seen in highly sensitive people, or HSPs), sensation-seeking, etc., that say a lot more about how an intelligent agent (human or otherwise) engages with the world and adjusts to it.

I’ll give you a hint as to why that’s important. A machine that optimizes itself by cooling down to become more efficient is essentially self-regulating. Both personality traits, sensory processing sensitivity and sensation-seeking, are about regulating sensory stimulation. Sensation-seekers seek stimuli, excitement, and novelty to stay engaged in life. HSPs often need downtime after too much sensory stimulation, like crowded hangouts with loud music. They feel the stress of too many sensations.

The 2 traits shape the decisions we make.

Physical AI will need such mechanisms built in to function well. In our example, the stimulus is heat, but we will see more such physical factors. So if a physical AI is tasked with a physical job, it will have to make self-regulating decisions so it can keep doing that job.

Take a sci-fi example. If there is a huge solar flare and it is likely to interfere with the physical AI’s job, the AI might have to say, “Sorry, not now, the solar flare will disrupt my communication with the server, and I could malfunction.”

We can prevent it, but we should not. I invoke Isaac Asimov’s Third Law of Robotics: A robot must protect its own existence as long as such protection does not conflict with the First or Second Law.

And if physical AI is a scarce and expensive resource, we can expect humans to purposefully make its physical personality more sophisticated, because those personality traits will be guiding mechanisms that help it adjust and adapt to the world. Just as these traits developed in humans because they offered a benefit, AI will have to be engineered to have them for its own benefit.

There is another exciting approach to personality in AI that will matter soon. Some personality traits give specific advantages. For example, being an extrovert makes social bonding easier, which has the advantage of better survival in a group. Conscientiousness is a personality trait that describes people who are organized and disciplined. They tend to have an advantage when it comes to planning for complex activities and learning complex material.

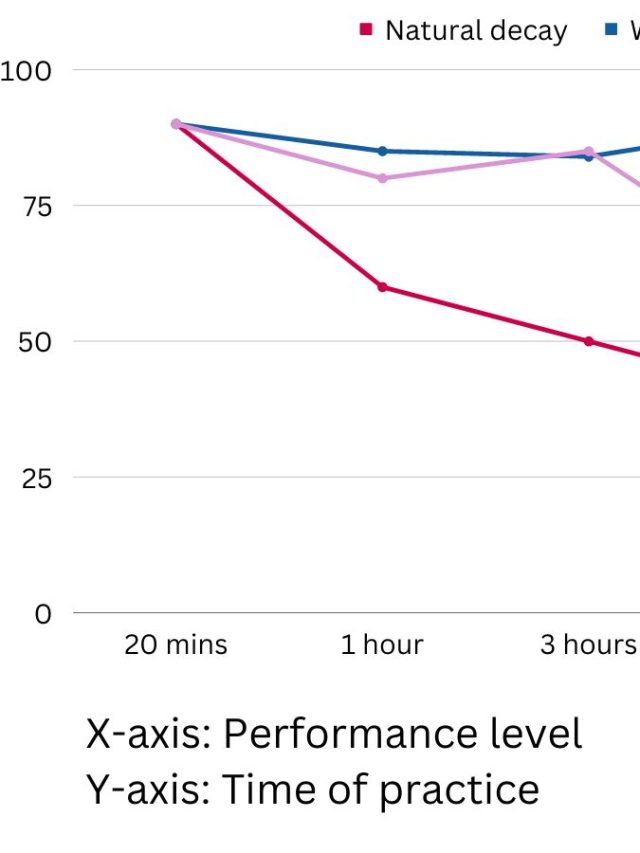

The core assumption is that certain traits have specific advantages, and that is why they must’ve emerged through evolution. We can ask the same question about AI: will giving AI a specific personality trait give it an advantage in some way? Researchers asked this question[4] and gave LLMs a personality by training them on huge datasets of conversations classified by personality type. They found that low extraversion (outgoing), low neuroticism (anxious), high conscientiousness (disciplined), and high agreeableness (cooperative) made the LLMs better at reasoning tasks, a pattern seen in humans as well.

Notice that the LLMs were trained on conversations, not on reasoning questions and answers. The personality traits seem to give some advantages. We do not yet know whether inducing a personality trait in an LLM will make it better at certain tasks than training it on the tasks themselves. Chances are that training on the task itself will outdo training on a personality. But the more interesting question is: will training the AI to acquire a personality trait make it more capable of handling completely off-script, novel tasks?

This is worth exploring because personality traits do affect what information gets highlighted, what information is deemed more or less important, whether logic or feelings are applied, etc. Even though the LLM will only mimic the personality traits, will they give it a unique advantage? We will soon have an answer!

AI has a personality because we want it to

LLMs like ChatGPT can be asked to role-play. That means any personality can be induced with a single prompt.

We study personality precisely because it is supposed to be fairly rigid, and knowing it helps us adjust to life, people, and stress.

The option to make an LLM change its personality with a single prompt makes its personality a non-personality. It becomes an ability.

But humans relate to things that feel human, and humans anthropomorphize anything they want to. Put 2 cute eyes on a ball, call it Glooby, and you’d hate to put a knife in it. We attach emotions to things very easily, especially if they show human features. It’s very easy for us to make something non-human a little more human. In fact, we see the world through the human lens. We can say a computer is angry if it is getting all heated up and making a high-pitched sound, kinda like how humans act when they are angry. When we spot human-like patterns, we anthropomorphize. When we impose human patterns on non-human things, we anthropomorphize.

So, LLMs are supposed to mimic that humanness. What better than personality types to show humanness?

Whether it is meaningful or not, knowing that AI behavior can have a personality type makes it easier for us to connect with it.

Maybe we don’t need personality testing or psychometrics to assess the AI; we need them for our own sake, to be comfortable with AI.

We have started anthropomorphizing it a little too much. This warning label is proof of it.

Anthropomorphism – ascribing human qualities like intelligence, motivation, thinking, etc., to a non-human entity.

Products don’t say, “This product may fail” or “Our services will sometimes not work.” Restaurants don’t say, “Our food can taste bad.” They say this only when there is a liability problem or when we irrationally expect things to be perfect.

It’s inherently expected that a product can fail. It’s expected that a human-made technology will fail at some point and offer us a bad experience. It is fair and expected that we hate some food and love some.

But OpenAI has to write “ChatGPT can make mistakes” because humans have already projected their idea of superintelligence onto the technology. Humans have convinced themselves that AI is supposed to be smart and perfect. As a consequence, we diss it when it is neither smart nor perfect.

Our expectations can be irrational. We can expect AI to be a miserable, useless tool, or we can expect it to be a perfect, intelligent life-form. Those expectations are also, in a way, a human projection of hope.

My friend Vinay Vaidya just left a comment on my LinkedIn post pointing out that when AI makes a mistake, we call it a “hallucination.” We see AI through a human lens. A term for a quirk of the human mind has become the official term for describing the AI’s internal workings.

We are not far from thinking: if AI hallucinates, makes mistakes, and apologizes, it must be conscious. Humans do those things and we are conscious, so why not the AI? This is a classic logical fallacy called the “false analogy” fallacy (a faulty argument from analogy).

We idolize God and think God must be smart and perfect; we have also started talking about AI being superior to us and expecting it to be perfect. Coincidence? No.

Humans anthropomorphize and expect products to be both human and perfect. Unfortunately, we are in a place where you can get either human or perfect, but not both.

The fact that a lot of people frame it as Humans vs. AI means that AI has been given a comparable status because of the “intelligence” factor.

Let’s be realistic about AI and not project our feelings onto it. If we start believing that AI has a human-like story, the line between humans and AI becomes even more blurry.

Sources

[2]: https://aclanthology.org/2024.blackboxnlp-1.20/

[3]: https://blogs.nvidia.com/blog/ai-city-challenge-omniverse-cvpr/

[4]: https://arxiv.org/abs/2410.16491

Hey! Thank you for reading; I hope you enjoyed the article. I run Cognition Today to capture some of the most fascinating mechanisms that guide our lives. My content here has been referenced and featured in the NY Times, Forbes, CNET, Entrepreneur, and many books and research papers.

I’m a psychology SME consultant in EdTech with a focus on AI cognition and Behavioral Engineering. I’m also affiliated with myelin, an EdTech company in India.

I’ve studied at NIMHANS Bangalore (positive psychology), Savitribai Phule Pune University (clinical psychology), and Fergusson College (BA psych), and I’ve been affiliated with IIM Ahmedabad (marketing psychology). I’m currently studying Korean at Seoul National University.

I’m based in Pune, India, but living in Seoul, S. Korea. Love sci-fi and horror media; love rock, metal, synthwave, and K-pop music; can’t whistle; can play 2 guitars at a time.
