I’ve been thinking about memes. 2 years ago, I called meme templates, meme phrases, and reel content outsourced cognition – borrowing others’ structured thoughts. Using phrases like “using my last 2 brain cells” or sharing the Drake meme template with new captions is a standardized way to think in everyday conversation. We don’t even have to compose a new sentence, yet we communicate intelligently by picking one of a million catchy statements & visuals.

We are literally… divided by nations, united by thoughts when it comes to memes.

This is a highly speculative article, so please indulge my imagination.

ELI5 summary

We need to care about the training data going into all of the conversational AI tools we use (like ChatGPT). What happens when AI gets trained on low-quality AI-generated content? Model collapse.

Garbage in – Garbage out.

The AI model becomes worse after training. This is a real fear researchers talk about. It defeats the purpose of having an intelligent assistant.

But there is a larger problem than this. What happens when WE keep consuming AI-generated content? Our standards drop – this is the AI symbiotic crisis (we are codependent with AI). Then we keep outsourcing our thinking and actions to AI, and our skills drop. Then, we become more dependent on AI.

It’s like when you cook and the first time it’s good, but over time, you stop caring about the right ingredients and the whole thing becomes a mess. But if you get used to it, your expectation of taste itself goes down. You get comfortable with lower-quality food. Then the trashiest food with some extra salt gives you satisfaction.

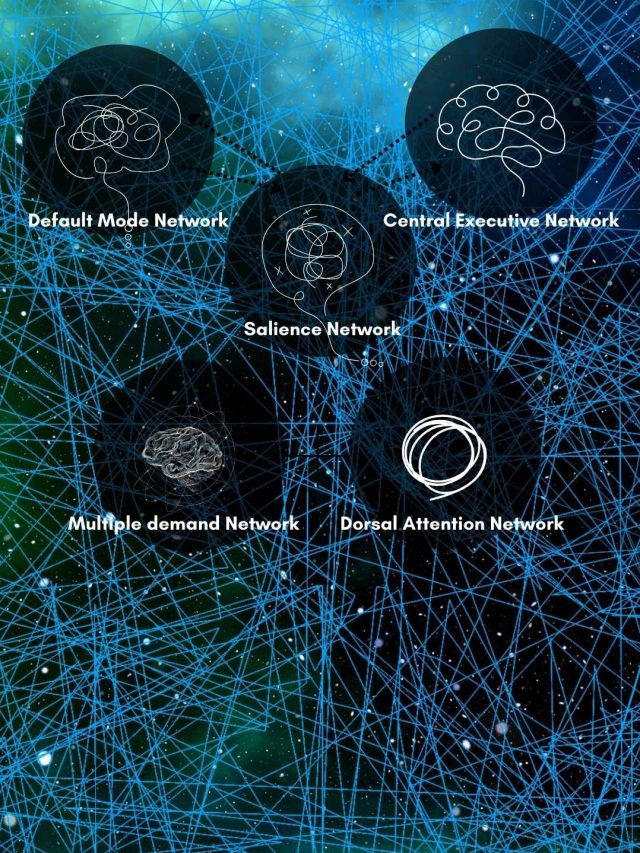

Energy-saving in the brain

From the point of view of the energy cost of thinking, using a template in the form of a meme is energy efficient. Thinking in memes works. We’ve put the burden of thinking on shared ideas that are readily available to us.

The energy cost of thinking is high. High mental activity[1] depletes glucose levels. Willpower[2] is also limited by glucose supply. The energy cost of running the brain itself is very high – the brain consumes 20% of the body’s oxygen. Yet it weighs roughly 1.3 kg (3 pounds) out of a typical 70 kg (150 pounds) body. That’s about 2% of your body mass taking up 20% of the oxygen. Within this high oxygen usage by the brain[3], 50% is used by neural activity in everyday life, and 50% is used by the glial cells that maintain the brain itself. In short, the brain is an extremely high energy-consuming organ.
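To make that arithmetic concrete, here is a small sketch that only uses the figures quoted above (1.3 kg brain, 70 kg body, 20% of oxygen). The function names are my own, and the "per kilogram" ratio is a rough derived number, not something taken from the cited sources.

```python
# Figures quoted in the paragraph above; everything else is derived from them.
BRAIN_MASS_KG = 1.3
BODY_MASS_KG = 70.0
BRAIN_OXYGEN_SHARE = 0.20  # fraction of the body's oxygen used by the brain


def brain_mass_share(brain_kg: float, body_kg: float) -> float:
    """Fraction of total body mass that is brain."""
    return brain_kg / body_kg


def relative_oxygen_intensity(mass_share: float, oxygen_share: float) -> float:
    """How many times more oxygen a kilogram of brain uses
    compared with the whole-body average per kilogram."""
    return oxygen_share / mass_share


mass_share = brain_mass_share(BRAIN_MASS_KG, BODY_MASS_KG)
intensity = relative_oxygen_intensity(mass_share, BRAIN_OXYGEN_SHARE)

print(f"Brain share of body mass: {mass_share:.1%}")
print(f"Oxygen use per kg vs. body average: {intensity:.1f}x")
```

In other words, under these rough numbers, each kilogram of brain uses on the order of ten times the oxygen of an average kilogram of body.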

As a result, we’ve developed many, many different energy-efficient mechanisms to use the brain. The most significant and impactful ones are heuristics & biases. These are decision-making shortcuts that grossly simplify our perception and make it easy to do something using only a small bit of information. E.g., buying a laptop can be extremely time-consuming and energy-consuming considering all the specs and features. But by relying just on popularity (as a heuristic), our brain favors a laptop based only on its popularity and overall rating. This simplifies the decision. (If you want to see a comparison of biases between humans and AI, check this out.)

Memes are similar. They reduce the effort of conversation by using standardized templates to express thoughts and emotions. The evidence lies in the overwhelming exchange of memes between people on social media.

The whole reason we make decisions using heuristics is that it is quick, economical, and usually needs less mental processing. That’s why thumbnails and titles matter so much – they are the heuristics we use to choose something to watch on Netflix & YouTube. Instead of deeply analyzing the story, titles, reviews, content, cast, thumbnails, previews, etc., we just use 1 or 2 of those parameters and make our decision to watch or not.

This high energy-consuming nature of the brain gently guides us to save energy, and one efficient energy-saving method is outsourcing thinking to Artificial Intelligence.

Thinking is a very energy-consuming task. So naturally, AI becomes a brain-energy-saving mechanism when we outsource our thinking to AI.

Smart, Dumb, or Artificially Smart?

Now, we’ll extend the meme-cognition outsourcing and consider how we are outsourcing our productivity & intelligence & thinking to AI.

Enough people on the internet have feigned intelligence by using AI to write for them. Here are some stats from Ahrefs[4], an authority in the blogging & social media market. 65% of bloggers use AI in some capacity (from ideation to refining content). 21% write first drafts of their content using AI tools, and 3% write complete drafts (with ChatGPT and the countless applications built on top of it).

A study by Amazon Web Services[5] suggests that a shocking 57% of the internet’s content has been translated by AI to some degree.

A survey by GitHub[6] says 97% of developers have used AI assistance for work. This says something. It gives credence to the idea that “AI won’t take jobs away; people who use AI will.”

But on the flip side, there are people like me. People who can barely code can produce usable code by asking AI to do it with just a few lines of what the code should do. So much so that I have to keep looking back on the basic syntax I learned years ago. But in all fairness, I’m not a developer.

My productivity seems more intelligent because I outsourced the execution of my thinking to AI. In all fairness, prompting well is thinking, but it isn’t enough if I can’t convert it into anything meaningful (workable code).

We see one more piece of evidence for this. Countless AI influencers sell prompt engineering courses. Prompt engineering is essentially structuring your prompt (the message/instruction) in a way that brings out productive outputs. Many of these courses are essentially just a quick explanation of how ChatGPT works and dozens of copy-pastable prompts to use while chatting with any conversational AI (ChatGPT, Perplexity, Copilot).

Copy-pastable. This means that the courses are selling what to type to make the AI work, which already does most of the work. They are offering copy-pastable messages like – “Write me a sincere apology email because I couldn’t meet a deadline. I was ill, and I couldn’t look at a screen.”

Such prompts are themselves outsourced cognition (just like the memes), on top of the email that is automatically written by the AI – it’s typo-less, clear, positively worded, appears genuine, and all you needed was “intent”. These are 2 layers of outsourcing cognition. From the energy-saving point of view, this is maximum energy savings. But I’d like to ask – at what cost? From just an intent to copy-pasting to AI doing its thing, we can show intelligent behavior.

Before we blatantly say this copy-pasting is itself insincere, consider this: Observational learning (when we learn by imitating others) is behavioral copy-pasting. It’s more energy efficient.

Now, there are 3 outcomes from this.

- AI is making us smarter by showing us what to do.

- AI is making us dumber because we don’t have to refine our skills.

- AI is making us appear & feel smart with its output, even though we are incapable of making that output without AI.

(there’s a 4th hidden one, which I will speak of later in the article)

All 3 are valid outcomes, and they are mostly determined by what you want to be.

1. Smarter: AI becomes the coach.

AI can make you smarter if you choose to learn. Classic theories, like the zone of proximal development, say that learning is maximized when the gap between what you can do alone and what you can do with some help is small. I can start dumb, see how AI does it, have enough knowledge to understand it, and learn by emulating what the AI did.

2. Dumber: AI becomes the lazy way out that prevents us from maintaining our skills.

AI can make you dumber if you stop using your skills. E.g., you start off as a writer and then let AI do all of it only to find out that your ability to form good sentences is dead.

3. Artificially smarter: AI becomes the ghost in the shell that is the human; we voluntarily become the marionettes controlled by the AI.

And finally, AI can make you fake your intelligence, knowledge, or analysis by doing the hard mental work on your behalf. This is when you’ve truly outsourced your thinking to AI. E.g., I could publish an innovative recipe without knowing anything about cooking. Or I could win at chess by just following AI recommendations without knowing what to do. I just follow – monkey see, monkey do. Humans become the symbiote living the life of AI.

- If the goal is to do smart things and not actually be self-sufficiently smart, AI will be the god of us.

- If the goal is to learn at an accelerated pace, AI is right there to help.

- If the goal is to get passive & lazy, AI might be the most useful thing we’ve created.

Let’s go back to the outsourced cognition bit. I suspect most of us are doing the efficient and easy thing because AI is the new fun toy to play with. It has become the mother of all shortcuts. And if it makes us feel smart and get more work done, why not?

But there will soon be a time when AI is with us, and it is no longer special. Just like memes. At that point – you’ll have already outsourced your cognition to AI, which potentially leads to a crisis.

The question is – do you reach artificial brain rot or not?

The AI symbiotic crisis

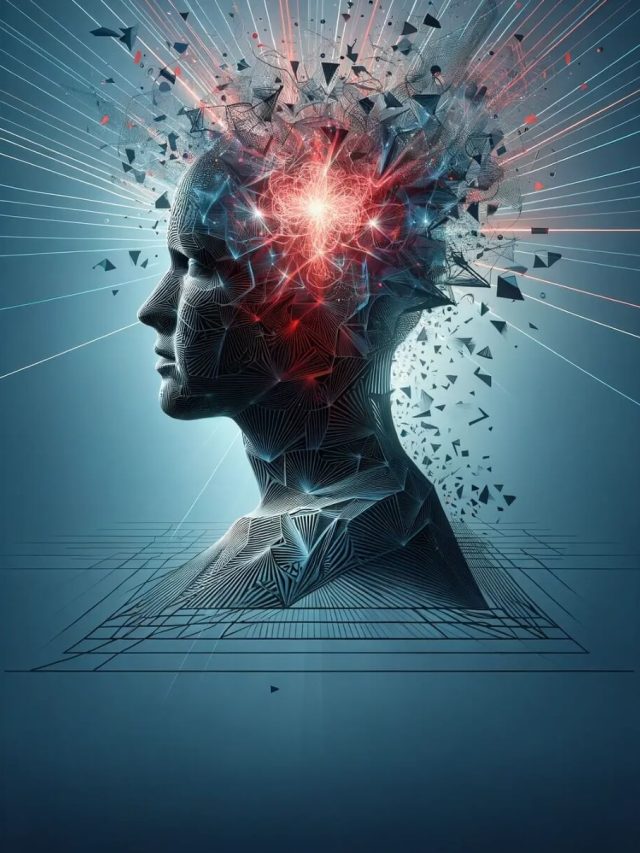

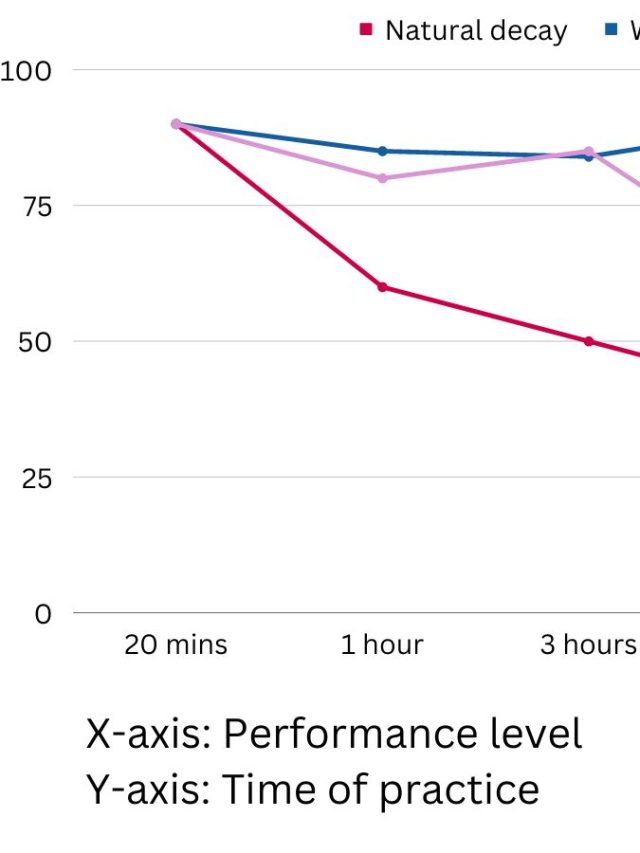

This artificial brain rot is actually a technical event in the age of AI – it’s called “Model Collapse”. Model collapse occurs when AI itself continues getting trained on degraded AI content and degraded data on the internet, and eventually, the AI starts spitting out gibberish because it’s trained on noisier data.

Researchers describe this as a degenerative process[7] where generative AI creates content, which eventually dominates the internet and thereby becomes the AI’s future training data, and it continues getting trained on its own output instead of better, more sophisticated data.

Ask for good output –> Get less good output –> Publish less good output –> Train AI on less good output –> continue –> Get mediocre output –> Publish mediocre output –> Publish more mediocre output with more errors & hallucinations –> Train AI on mediocre output –> continue –> Get bad output.
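To get a feel for this loop, here is a toy sketch in Python. It is not the experiment from the cited paper, and every name and parameter in it (`simulate_collapse`, the sample size of 10, the 500 generations) is my own assumption. The "model" is just a bell curve fitted to a handful of data points, and each new generation is trained only on samples drawn from the previous generation's model.

```python
import random
import statistics


def simulate_collapse(generations: int = 500, sample_size: int = 10, seed: int = 0) -> list[float]:
    """Toy model-collapse loop: refit a Gaussian to its own samples, over and over.

    Returns the fitted standard deviation (the "diversity" of the output)
    for generation 0 (the original human data) and every later generation.
    """
    rng = random.Random(seed)
    mean, std = 0.0, 1.0  # generation 0: stands in for original human-made data
    spread = [std]
    for _ in range(generations):
        # "Publish" a small batch of content from the current model...
        samples = [rng.gauss(mean, std) for _ in range(sample_size)]
        # ...then "train" the next model on nothing but that batch.
        mean = statistics.fmean(samples)
        std = statistics.stdev(samples)
        spread.append(std)
    return spread


spread = simulate_collapse()
for generation in (0, 100, 200, 300, 400, 500):
    print(f"generation {generation:3d}: spread of outputs = {spread[generation]:.3g}")
```

With such small batches, the estimation errors compound from one generation to the next, and the spread of the output tends to shrink toward zero: the model keeps producing narrower and narrower variations of itself. Real language models are far more complex, so treat this only as an illustration of the feedback loop, not a prediction.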

A human element makes this even worse – the AI degrades from really good to really bad because, at every step of the training, another problem occurs:

- The human using AI as an informational source & quality check fails to distinguish good information from bad, and lets the bad pass into the system because the human has lost judgment and cannot be trusted on informational accuracy.

- No more studying, no more fact-checking, blindly trusting AI, blindly accepting information, not thinking critically, etc., will be behaviors that make humans lose this judgment and aggravate the model collapse.

When this cycle continues, there is a steady, noticeable decline over time, and both – the human & the AI – start incrementally lowering their standards without realizing it.

I call this the AI symbiotic crisis – where humans and their AI tools feed off each other into degradation.

We are going to have to really think and assess our values as humans and choose which of the 3 paths we want to take – get smarter, get dumber, or get artificially smart.

In no reasonable capacity would I think that we’d all want to achieve artificial brainrot on purpose, but welp, we are known to be a self-sabotaging species at times.

Being artificially smart isn’t necessarily a bad thing. At best, it’s like upskilling or getting a new tool to do a job better and still feel smart and good about ourselves. At worst, it makes us a hack-job. If being artificially smart serves the end goal of getting work done, there isn’t a real problem. The work gets done anyway. A person (or person with AI) is paid for that work.

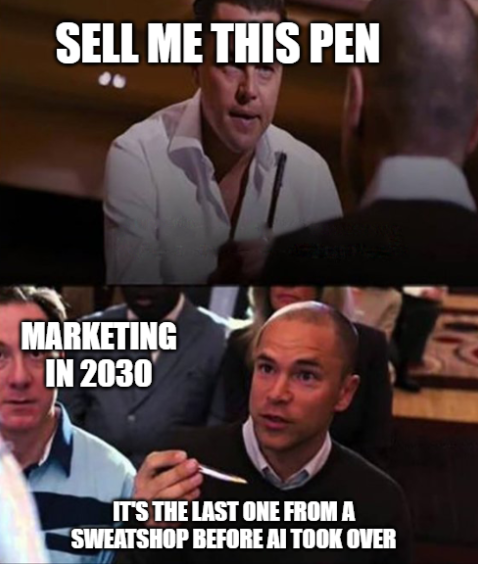

Here’s my attempt to show all 3 outcomes – AI makes us smarter vs. dumber vs. artificially smarter – in meme format.

A fully useless meme template just dropped. I asked ChatGPT 4o to make it with the prompt “Make a meme template and make it really look like an AI did it.”

I usually put some thought into the memes I make for the content on Cognition Today. So by doing this, I’m no longer maintaining my skill or even thinking (got dumber). But I learned about this 3-layered format that loosely captures cognitive dissonance visually (got smarter). And I can fake my intelligence by saying I created this whole new meme template (got artificially smarter).

This attitude raises a bigger question. Where are we headed as a species?

I don’t think AI will make us stupid enough to turn the sci-fi fantasy Idiocracy into a documentary and a history chapter. But…

Our evolution

We are Homo sapiens sapiens. We are supposed to be very smart because we have a big, dense brain and we live in a society built on cooperation. We share skills, build things, and engage each other. The big brain and the social element got us to manipulate the environment and engineer our way to the top of the food chain.

With AI, I don’t see that we have a competitor. Let’s consider it as a part of the big brain and also a part of the social environment. Now, everything we do will have AI in it. Perhaps like a needy pet or a subservient tool we depend on. That means we become… a symbiotic species with AI, a mutually beneficial one. Like the smooth-billed ani that eats ticks and parasites from the fur of a capybara in their mutually beneficial symbiotic relationship. The capy gets a bro and stays tick-free, the birb gets food.

Let’s call our AI symbiotic level-up Homo Technus. Who gets to be the capybara, you decide.

Maybe the question of whether AI makes us smarter or dumber is pointless. We may just become psychologically symbiotic with AI as a new step in our species’ evolution – welcome, Homo Technus.

We are entering a symbiotic relationship with AI as the next step in our evolution.

Sources

[2]: https://psycnet.apa.org/buy/2007-00654-010

[3]: https://bmcbiol.biomedcentral.com/articles/10.1186/s12915-020-00811-6

[4]: https://ahrefs.com/blog/blogging-statistics/#blogging-ai-statistics

[5]: https://arxiv.org/pdf/2401.05749

[6]: https://github.blog/news-insights/research/survey-ai-wave-grows/

[7]: https://www.nature.com/articles/s41586-024-07566-y

Hey! Thank you for reading; hope you enjoyed the article. I run Cognition Today to capture some of the most fascinating mechanisms that guide our lives. My content here is referenced and featured in NY Times, Forbes, CNET, Entrepreneur, and many other books & research papers.

I’m a psychology SME consultant in EdTech with a focus on AI cognition and Behavioral Engineering. I’m affiliated with myelin, an EdTech company in India, as well.

I’ve studied at NIMHANS Bangalore (positive psychology), Savitribai Phule Pune University (clinical psychology), Fergusson College (BA psych), and affiliated with IIM Ahmedabad (marketing psychology). I’m currently studying Korean at Seoul National University.

I’m based in Pune, India but living in Seoul, S. Korea. Love Sci-fi, horror media; Love rock, metal, synthwave, and K-pop music; can’t whistle; can play 2 guitars at a time.