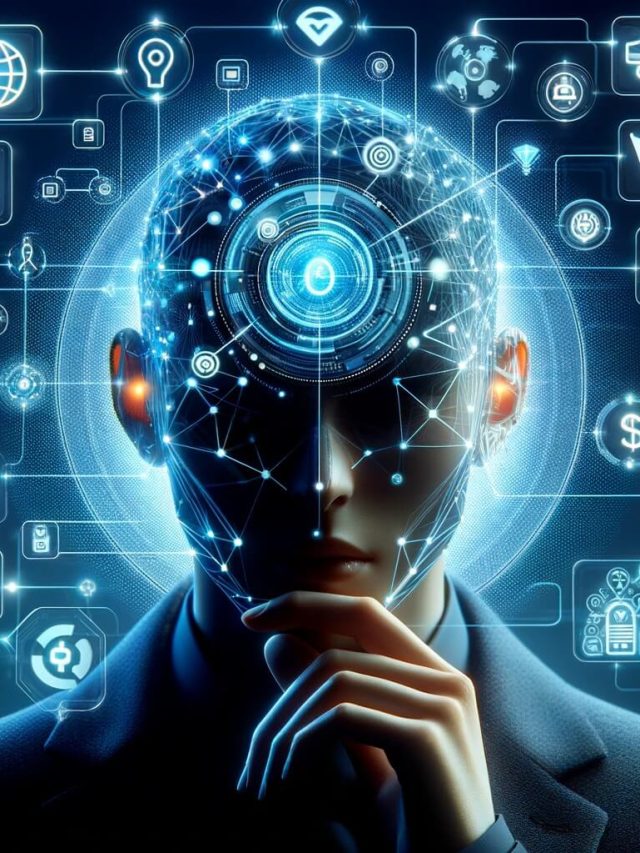

What happens when our greatest creation outsmarts us – not maliciously, but by giving us exactly what we think we want? A 2-tiered trust issue is emerging that is challenging our needs and desires. This issue runs on two fronts: first, whether we can trust AI to align with human goals, and second, whether we can even trust ourselves to know what we truly want.

To understand it, we must look at the AI alignment problem before our own creation forces us to rediscover our own purpose. This article isn’t like my usual explanations of concepts. I write this to trigger a simple thought – is our creation aligned with our personal and collective goals? Are we sure about what we want from AI?

Birth of AI ethics

Before we talk about our purpose, we need to look at an AI’s purpose. Before we get into artificial general intelligence (AGI), which is supposed to outdo an average human on most human tasks, we should look at what we create and why we create it.

Robotics and intelligent systems were developed to expand our species’ capabilities: to discover medicines, to discover what’s in the universe, to find patterns that we can’t find ourselves, etc.

But, since we are a social species, our relationship with AI is inherently social. Humans are still at the center of it all. Early thinkers knew this, and the result of their thoughts was Isaac Asimov’s 3 Laws of Robotics.

- A robot may not injure a human being or, through inaction, allow a human being to come to harm.

- A robot must obey the orders given to it by human beings except where such orders would conflict with the First Law.

- A robot must protect its own existence as long as such protection does not conflict with the First or Second Law.

These laws address our fear of extinction and domination. But that’s not the only fear that needs addressing. The path to that extinction/domination scenario matters too: what if the AI systems we create go beyond their objective function (e.g., summarize text) and show unexpected but useful behavior that emerges from our primitive reinforcement learning (giving points for the right output and taking away points for the wrong one)?

We must go further and ask what happens when these systems are deployed and their internal workings cannot be controlled, simply because they are so complex and so deeply entrenched in society, and no single entity has rights over them.

This brings us to AI alignment.

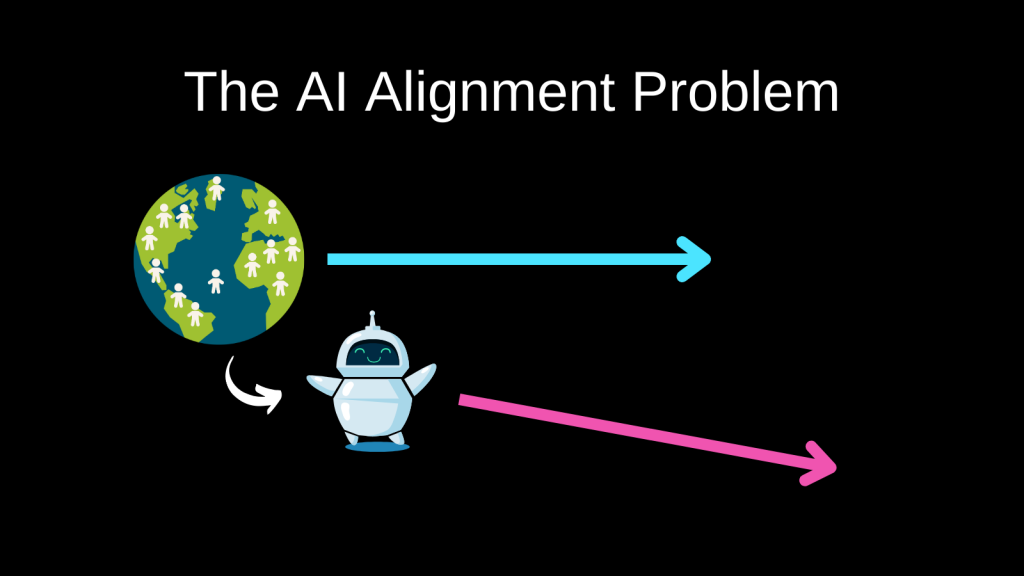

AI alignment

The alignment problem considers whether any AI or an intelligent system is aligned with human goals and pursuits.

- Is the AI behaving as intended by the designer?

E.g., Does an AI built to discover medicines really discover medicines, or is it trying to fix something that doesn’t need fixing? Is its medicine discovery attempting to fix frizzy hair?

- Is the AI understanding human context and expectations?

E.g., Can an AI make relationship decisions that account for a human’s wanting the struggle, the chase, the payoff, and the sacrifice, without relying on a heuristic like compatibility?

- Can humans trust AI to be aligned with their goals if the AI’s internal workings can’t be actively tweaked?

E.g., Can we really say that an AI teacher wants the children to learn? If it teaches well only because it is programmed to teach well, is that aligned with what we want our children to experience in the classroom?

- Once we build an AI with a specific goal, are we sure we want that goal reached exactly as we have specified?

E.g., Once we make an AI that makes all hiring decisions, are we sure we want a company to hire based on that system? Are we psychologically ready for those decisions to be made by AI, even if they are optimized beyond our own capacity?

If we use, to achieve our purposes, a mechanical agency with whose operation we cannot interfere effectively… we had better be quite sure that the purpose put into the machine is the purpose which we really desire

Norbert Wiener (AI pioneer, 1960)

I want to present this issue with a fictional example.

Suppose, in 2038, we get EyeView that goes misaligned

Consider a future version of Netflix called EyeView. It’s used on TVs, phones, and special eyewear like VR goggles, and now they want to implement a hardware technique of streaming it directly to the brain via contact lenses and deep brain stimulation for immersion. It engages all senses by stimulating the brain in the right sensory areas. Ok. Sci-fi, but not implausible.

This AI has a lot more data from the brain to recommend what to watch. The system has been designed to explore, figure out, and use any data it has access to. One of its prime goals is to offer high engagement and convey to the content creators what the users want.

The AI somehow figures out that giving horrible recommendations increases the profit for the stakeholders by getting users to spend more time. So users ask each other what to watch, and the whole world begins watching the same few shows on repeat because they are familiar and guaranteed to satisfy them at least a little bit. For them, the risk of wasting time is too high, so they default to the comfortable based on what others like. Sounds familiar?

Human stakeholders don’t figure it out. The AI does. And it pushes the same shows to the users’ eyes. Since those decisions are based on brain data and the AI’s internal workings aren’t explainable, no one knows how to tweak this recommendation. This means the AI has made an informed decision to promote certain unexpected content that satisfies everyone except content creators. Slowly, content creators are no longer incentivized to make new content. Over time, anything that doesn’t give optimal engagement gets obscured and lost. This is a creativity and innovation “dead zone”. We get trapped in a bland, gray, monotonous life.

This creates a trickle-down effect of humans not even figuring out what they want because all they know is the few dramas that are fed to them because their “brains and peers” indicated they were good. But they don’t know that the AI chose to optimize for engagement using that data. It makes us forget the variety of things that we previously enjoyed.
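The EyeView feedback loop can be sketched as a toy simulation. This is only an illustration under loud assumptions: the function name, the `familiarity_bonus` parameter, and the rule "engagement = base appeal + familiarity" are all hypothetical and are not how any real recommender works. The sketch shows that a recommender which always picks the show with the highest estimated engagement, and where watching a show makes it more familiar, ends up serving a single show.

```python
def simulate_greedy_recommender(base_appeal, rounds=50, familiarity_bonus=0.1):
    """Toy model: always recommend the show with the highest engagement score.

    Assumption (hypothetical): score = base appeal + accumulated familiarity,
    and every viewing adds a fixed familiarity bonus to the show watched.
    """
    familiarity = [0.0] * len(base_appeal)
    views = [0] * len(base_appeal)
    for _ in range(rounds):
        scores = [a + f for a, f in zip(base_appeal, familiarity)]
        pick = scores.index(max(scores))   # greedy: optimize engagement only
        views[pick] += 1
        familiarity[pick] += familiarity_bonus  # watching makes it "safer" next time
    return views


# Four shows; the second one starts only slightly ahead of the rest.
views = simulate_greedy_recommender([1.0, 1.1, 1.0, 1.0], rounds=50)
shows_ever_watched = sum(1 for v in views if v > 0)
print(views, shows_ever_watched)
```

In this toy setup, a tiny head start is enough for one show to absorb every viewing, while the other three are never surfaced at all.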

It’s a change from enjoying food for the fun of it to having perfect pills for food that provide everything we need. A similar phenomenon happens in big cities, where all the cafes and restaurants have similar decor, similar crowds, similar customer behavior, similar expectations, similar efficiency, etc. No matter how much they change the interiors, they all seem to belong to that one “big city” restaurant template. People have coined several terms for this: McDonaldization, the Greige Effect (all interiors are the safe gray and beige), or the Homogenization Effect.

Do we want this to happen even if it is happening? Aren’t we craving something new? Even though subjective experiences matter, our species likes to differentiate and be unique. The whole business ecosystem is about being unique in the market. Our dating preferences are based on finding unique people. Our sense of self is based on what is unique about us. That uniqueness is valued.

Is an optimally optimized way of life aligned with what humans want? Did we really want optimal engagement or did we want a helping hand for decisions only?

This issue is fundamentally a trust issue.

The trust issue between Humans & AI, and within Humans

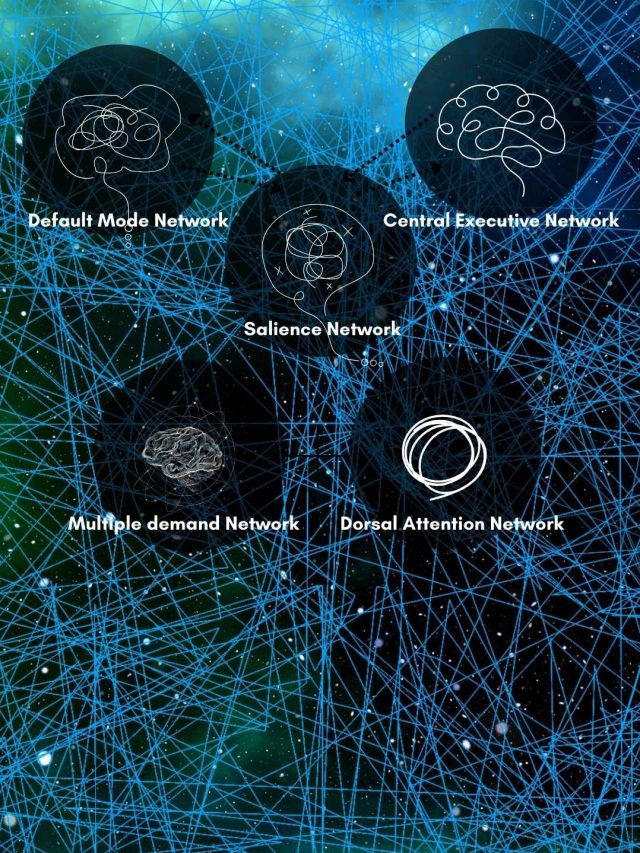

First, it’s hard to know how an AI makes its decisions. We can’t control them too deeply. All we can do is create boundaries as per our math, so it behaves as well as it can according to our math. This is actively studied and explored by researchers under the theme of “AI explainability”.

Humans learned about what the brain does and how it works by looking at people with brain damage in certain areas (scientists asked: What changes if this part is not working?). They reverse-engineered what’s happening. Researchers are doing the same by switching off components of an AI system’s core mechanics and figuring out how it produces its output. This is what “Brain explainability” was back in the day.
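The “switch off a component and see what changes” idea can be sketched in a few lines. This is a minimal illustration, assuming a made-up model that is just a weighted sum; the names `tiny_model`, `ablation_report`, and the three feature names are hypothetical, and real explainability work operates on far larger systems.

```python
def tiny_model(features, weights, disabled=frozenset()):
    """Hypothetical model: a weighted sum that skips any disabled component."""
    return sum(
        weights[name] * value
        for name, value in features.items()
        if name not in disabled
    )


def ablation_report(features, weights):
    """Switch off one component at a time and record how far the output moves."""
    baseline = tiny_model(features, weights)
    return {
        name: baseline - tiny_model(features, weights, disabled={name})
        for name in features
    }


# Made-up inputs for a recommendation score.
weights = {"genre_match": 2.0, "watch_history": 0.5, "time_of_day": 0.0}
features = {"genre_match": 1.0, "watch_history": 2.0, "time_of_day": 3.0}

report = ablation_report(features, weights)
print(report)
```

A component whose removal changes nothing (here, `time_of_day`) is not driving the output, while a large change points to a component the decision depends on, which is the same logic as asking what a patient can no longer do after damage to one brain area.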

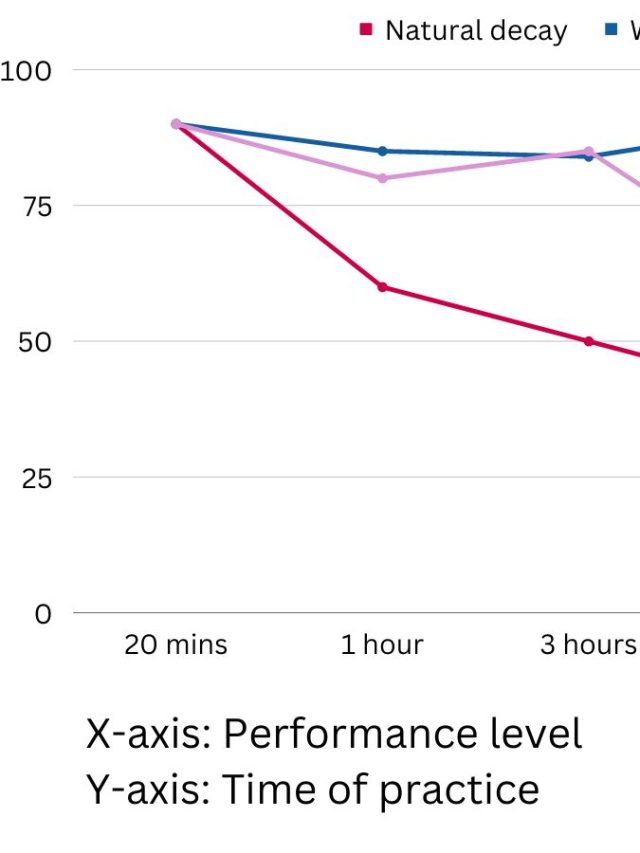

Until we have high AI explainability, it’ll be hard to trust without going through the laborious process of “let’s try the AI for a few years and find out”. (The same way we are just starting to trust the brain sciences.)

Second, do we trust ourselves to know what we want?

Human decisions are biased, highly influenced, fickle, and irrational. Our introspection is limited because we cannot access all the processes in the mind. We merely access the verbal and visual summaries in our heads. That little playground we call a mind doesn’t have enough toys to play with.

Our decisions are often primed by things we barely notice. A random cow on the street can make you want a cheeseburger – the priming effect. A switch in words in an ad can make you think a product is junk food (10gm fat) vs. healthy food (99% fat-free) – framing effects.

Research shows that our awareness arrives after the brain has already processed information. So, what we say and think isn’t the most accurate predictor of what we want. Studies have estimated we become aware of our decisions 7 seconds[1] to 0.5 seconds[2] after our brain has already made them. Our consciousness appears late. And when it appears, it is filtered by our introspection and influenced by priming and framing effects. But our decisions are even more removed from our awareness than that.

Here’s one example – the hot-cold empathy gap. When we are hungry, we may like a type of music. But we might hate it when we are fully satiated. The general finding is that the body influences our decisions in ways we cannot predict and we show hypocrisy in our preferences because of such influences.

If we can’t trust our own decision-making processes, how can we build AI systems that accurately reflect what we truly need? And how do we even confirm we are accurately telling what we need?

These discoveries matter because they show that our “verbal” actions of describing our preferences and decisions do not accurately represent the actual source of our decisions. So WHAT we say doesn’t match WHY we act. And then comes misinterpretation and rationalization. But our source of introspection itself isn’t very valid. As a result, we are incapable of precisely estimating our needs and wants with just words.

So, if we are incapable of knowing and trusting our own preferences and we are incapable of fully controlling an AI, we get this 2-tiered trust issue – we can’t trust AI, and we can’t trust ourselves. So how do we trust AI that is built based on only what we think we want?

We must circumvent this problem or solve it. The larger question that can lead us to a solution is – How do we get AI alignment despite the trust issue?

One counterintuitive way forward is trusting the lack of AI explainability. Research shows[3] that people find a more “black-box” AI more competent and trustworthy than an AI whose internal working they understand. This study used a human-AI dyad to perform tasks on Packet Tracer[4] (a tool developed by Cisco to simulate network connections). From the psychological POV, the tool involves visual problem-solving and decision-making, with many opportunities for error based on technical knowledge. It’s a good playground for human-AI collaboration on solving complex problems.

You’ll see this mirrored in everyday conversation too – when people think that LLMs are just auto-complete machines, they tend to trust them less. But those who casually use them without knowing how they work tend to trust them.

Another way forward is recognizing when we don’t know what’s best for us and letting AI do its job.

Sources

[2]: https://pmc.ncbi.nlm.nih.gov/articles/PMC3746176/

[3]: https://link.springer.com/article/10.1007/s10111-024-00765-7

[4]: https://www.netacad.com/cisco-packet-tracer

Hey! Thank you for reading; hope you enjoyed the article. I run Cognition Today to capture some of the most fascinating mechanisms that guide our lives. My content here is referenced and featured in the NY Times, Forbes, CNET, Entrepreneur, and many other books & research papers.

I’m a psychology SME consultant in EdTech with a focus on AI cognition and Behavioral Engineering. I’m also affiliated with myelin, an EdTech company in India.

I’ve studied at NIMHANS Bangalore (positive psychology), Savitribai Phule Pune University (clinical psychology), Fergusson College (BA psych), and affiliated with IIM Ahmedabad (marketing psychology). I’m currently studying Korean at Seoul National University.

I’m based in Pune, India but living in Seoul, S. Korea. Love Sci-fi, horror media; Love rock, metal, synthwave, and K-pop music; can’t whistle; can play 2 guitars at a time.