Cognitive biases and thinking errors are common. Our brains have evolved tendencies that, unfortunately, sometimes lead to errors. Our perception is compromised, our decisions are based on wrong or partial information, and our reactions are based on a tiny slice of information. But there are things you can do to counter biased thinking.

These could be errors in how the brain absorbs information – interpreting conversations, reading articles, understanding behavior, understanding others’ motivation, rights & wrongs. Cognitive biases are errors in thinking and perception that make us think in a particular way even when it is inappropriate or wrong. They systematically warp our perception of reality. Cognitive biases emerge from the heuristics our brains acquired through evolution.

The brain has evolved quick tendencies for a reason, but many of them are out of context today. For example, jumping to conclusions such as – ‘the food is toxic based on its color’ may have been useful back in the day, you know, a million years ago.

These ‘heuristics’ lead to highlighting day-to-day information in unproductive ways. Especially when there are inherent tendencies about oneself or others. If there is a tendency to be self-critical, you might interpret social cues in unfavorable ways. You might interpret a lack of party invitations as not being cool enough to hang out with the group. It isn’t easy to counter such thinking, and quick fixes are not likely to work[3].

The advantage of having such tendencies to jump to conclusions is SPEED & EASE of decision-making. However, speed compromises accuracy here. Biology is a product of evolution and not design. There are optimizations. We didn’t get both speed and accuracy like a computer by default.

A short recap of cognitive biases

Some conclusions are more likely to be wrong than right. They confirm beliefs that we already have by selecting bits of information and giving those bits undue importance. This is the confirmation bias. We notice and remember information that confirms our belief and ignore or dismiss information that goes against our belief. It is the queen of the biases[7].

There are other biases[8] such as the gambler’s fallacy: we somehow believe that the world likes to balance itself out. If you toss a fair coin 5 times in a row and get heads every time, what do you think the next toss would yield? Heads? Tails? Most people believe that it would be tails. This is wrong; heads and tails are still equally likely. Previous coin tosses have no causal relationship with the next toss. They are independent events. People make the error of thinking that when something happens a lot, the opposite becomes more likely in subsequent events. These errors lead to heavy monetary losses in gambling.
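The independence of coin tosses can be checked with a quick simulation. This is a minimal sketch, not part of the cited research: the function name `heads_rate_after_streak`, the number of tosses, and the fixed seed are all illustrative assumptions. It tosses a simulated fair coin many times, finds every toss that follows at least 5 heads in a row, and measures how often that next toss is heads.

```python
import random

def heads_rate_after_streak(num_tosses=200_000, streak_len=5, seed=0):
    """Estimate how often heads follows a run of `streak_len` or more heads."""
    rng = random.Random(seed)  # fixed seed (an assumption) so the run is repeatable
    followups = []             # outcomes of tosses that came right after a streak
    run = 0                    # consecutive heads seen just before the current toss
    for _ in range(num_tosses):
        toss_is_heads = rng.random() < 0.5  # simulated fair coin
        if run >= streak_len:
            followups.append(toss_is_heads)
        run = run + 1 if toss_is_heads else 0
    return sum(followups) / len(followups), len(followups)

rate, count = heads_rate_after_streak()
print(f"After 5+ heads in a row ({count} cases), the next toss was heads {rate:.1%} of the time")
```

If the world really "balanced itself out", the share of heads after a streak would fall well below half. With a fair coin it should stay close to 50%, which is what the gambler's fallacy overlooks.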

Another pervasive bias[9] is the anchoring effect. Nobel Laureate Daniel Kahneman and his colleague Amos Tversky conducted an experiment (Kahneman, Thinking, Fast and Slow, 2011) in which they asked people the following question – What percentage of African countries are a part of the United Nations? Two equal groups were created. One group was asked – Is it higher or lower than 10%? The other group was asked – Is it higher or lower than 65%? The first group gave an average answer of 25% and the second, 45%. The 2 questions each included an anchor – 10% and 65%. These numbers gave people a starting point to think around, follow up with assumptions, and then give an answer.

One powerful source of persuasion is deliberately biasing someone’s perception using the “framing effect”. When an idea is converted to words with some context and details like color, culture, font, accents, timing, etc., a “frame” is created for the idea. The most common framing is portraying something as a loss or gain. But there are other frames too – cultural frames, emotion frames, identity frames, etc. “This sanitizer kills 99% of germs” is a gain frame, which highlights the positives, compared to the less persuasive “this sanitizer only fails to kill 1% of germs”, which highlights the negatives. Rewording something with urban lingo to appeal to a Gen Z crowd would be an identity frame. Using faces to show happiness or sadness would be an emotion frame. Frames change how we interpret the core idea.

There are over 100 cognitive biases. I highly recommend reading this book[10] and this book[11] to learn more about them; you won’t be disappointed.

I’ve listed a few strategies that will counter some of the “parent” cognitive biases like the confirmation bias, the interpretation bias, survivorship bias, anchoring bias, and the framing effect.

8 strategies to think clearly and objectively: How to overcome thinking mistakes that we make

We have a powerful multi-purpose instrument called the brain, which can be trained with just a little practice. To overcome thinking errors, you need to let go of many assumptions and learn to accept a new assumption: you may have already missed meaningful information in your perception, and you have to put in the effort to fill in the missing pieces. The causes could be your bias, pure logic, or consequences of how things work in the world.

1. Focus on the data: In any situation that demands decision-making, focus on the evidence or information. Even the bad kind. Data might be hard to spot, but can be figured out. Just takes a little bit of effort. Once this becomes a habit, it’s nearly effortless. However, the anchoring bias occurs because the information itself biases you[12]. For that, consider the opposite information and see how it makes sense.

2. Seek out contrary data and conclusions: Keep an eye on bad reviews and see if they matter to you. One hundred good reviews are great, but a hundred good reviews and a few bad reviews are better. This is your best weapon against confirmation bias. It is perhaps the most important technique in this list as well: if there is data that supports a notion, find data that doesn’t, or at least try to think in that direction. If you google “Is XYZ good?”, also ask “Is XYZ bad?”. You’ll have a much clearer picture of everything. And I really mean EVERYTHING. In fact, this is at the core of scientific investigation. This is how accurate knowledge builds.

3. Understand the noise: Focus on important aspects of a problem, not every single aspect. It is hard to filter out the noise but let me show how noise is useful when avoided correctly[13]. Noise is background information that is of no use to you. Suppose you have to read a huge textbook for an exam. How do you know what to focus on? A lot of the book’s information might be useless for the exam, but you might feel it is important because it is in the textbook. Once you know what your exam is all about, you can learn to predict what seems important and what IS important. But, the additional information in the book could be a gateway to explore and figure out what is important. So noise is needed to understand the signal and then separating the two can help. An expert may tell you what to leave out and ignore, but you might not become an expert until you learn to identify the noise.

4. Test and Re-test: Consider the following example. You are talking with a friend, and he is not friendly. You wonder why and think that perhaps your friendship is changing or he had a bad day, or you said something unpleasant. Instead of drawing such conclusions, test and retest. Try having a similar conversation again or perhaps ask how his day was. Perhaps you are concluding that your boss is cranky on Wednesdays. Don’t just test this hypothesis for Wednesdays, test this observation for all days. Maybe your boss is always cranky, or it was random crankiness – work stress? This is tricky; because, if done wrong, you walk right into the confirmation bias.

5. Make educated guesses: Look for anchors. People ask leading questions that contain information that primes

6. Avoid misattributions: Sometimes, we get attracted to advertisements based on things unrelated to the advertised products. There are images that evoke emotional responses. Try to isolate that emotion. Its purpose might be to compensate for the lack of useful content or amplify a desirable feature. We often misattribute emotions[14] to a false cause despite the presence of a true cause. Let us look at mobile applications. Sometimes great restaurants make terrible apps and reviewers rate the app for what it is – an app. When you see a low rating, can you analyze if the poor rating is for the food or the app? Even though an app sucks, the food can be good, but the app warrants a low rating. We misattribute this and assume the food is bad. Is the food bad because the app sucks? No. The food could be brilliant AND the app can suck, the app shouldn’t change the perceived quality of the food.

Similarly, your decisions about clothing and appliances will be different when hungry or fully satiated. Only because the decision is influenced by hunger even when hunger is unrelated. In general, your bodily states like feeling warm/cold, hungry/thirsty, sexually aroused/sleepy, etc., will influence decisions but your brain will misattribute that influence to a product you want to purchase.

Another misattribution is called the “self-serving bias” – we take credit for victories and blame others or circumstances for failures. This bias enhances our own effort and importance and avoids taking responsibility for anything that doesn’t make us look good. You may be doing this when you don’t perform well or get negative feedback. It is easier to blame others than accept that you might need to work on your skills or communication. That is the bias.

7. Pretend to be someone else: Every person is biased in some way, through their experience, their body, their identity, their circumstances, their learnings, etc. So to counter your bias, you can adopt someone else’s bias. Traditionally, this is called empathetic perspective taking – you evaluate something based on how someone else you know will evaluate it. Our brain creates a model of people we meet. When you pretend to be someone else, you adopt that model. And with that model, new perspectives emerge for you to bias yourself in new ways. This is a tip to take on new perspectives when doing so becomes hard because of your bias.

8. Assume you don’t know what you don’t know: In many situations, it is impossible to understand the clockwork that leads to a phenomenon. Let go of assumptions. Accept that there are factors at play that could be beyond your comprehension – The

Countering the framing effect

The framing effect is robust, but it can be countered by listing pros and cons of options[15] presented with multiple frames. For example, if a tablet is presented with a positive frame like “9 out of 10 patients feel no side effects” vs. a negative frame like “Only 1 out of 10 patients feel side effects,” the first frame might encourage a purchase but the second one might not. That is when the framing effect transforms equal options into unequal options through verbal cues that represent a gain vs. a loss. Using a pros and cons technique to evaluate both options – “pros: 90% good, cons: 10% bad” – can effectively cancel out the framing effect so that both options are considered equal. One explanation for this is that the pros and cons method de-biases the framing effect by bringing attention to the actual information as one single “100%” or “whole” and pulling attention away from the individual frame. So the information used to evaluate the 2 options is transformed and reconceptualized in one’s working memory, which becomes the new reference to evaluate options.
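The pros and cons technique can be written out as simple arithmetic. The following is only an illustrative sketch: the function `pros_cons` and its `count_is_bad` parameter are made up for this example and do not come from the cited study. It converts each framed claim into the same "pros share, cons share" pair of one whole.

```python
from fractions import Fraction

def pros_cons(count, total, count_is_bad):
    """Turn a framed claim like '9 out of 10 ...' into (pros, cons) shares of one whole."""
    share = Fraction(count, total)
    if count_is_bad:
        return (1 - share, share)   # the claim counts the bad outcome
    return (share, 1 - share)       # the claim counts the good outcome

# "9 out of 10 patients feel no side effects" (positive frame)
positive_frame = pros_cons(9, 10, count_is_bad=False)
# "Only 1 out of 10 patients feel side effects" (negative frame)
negative_frame = pros_cons(1, 10, count_is_bad=True)

print(positive_frame == negative_frame)  # both reduce to 9/10 pros and 1/10 cons
```

Once both claims are reduced to the same pair of numbers, the wording no longer has anything left to tilt the comparison.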

In a study[16], researchers saw that instructing participants to “think like a scientist” as opposed to “rely on gut reactions” reduced the impact of framing effects. This shows how the framing effect essentially acts as a heuristic to simplify decision-making by basing decisions on quick intuitive responses to presented information (primary information + framing cues).

The framing effect can vanish[17] when options are presented in a foreign language, regardless of positive/negative or gain/loss framing. One reason for this is that a foreign language is harder to process than the native tongue, so it needs deliberate thinking which reduces the influence of automatic thinking triggered by the frame. A foreign language also provides psychological distance which helps process information more abstractly and globally which pulls attention away from the specifics of a frame.

The benefits of overcoming cognitive biases and thinking errors

Short answer – better thinking, decision-making, and perception.

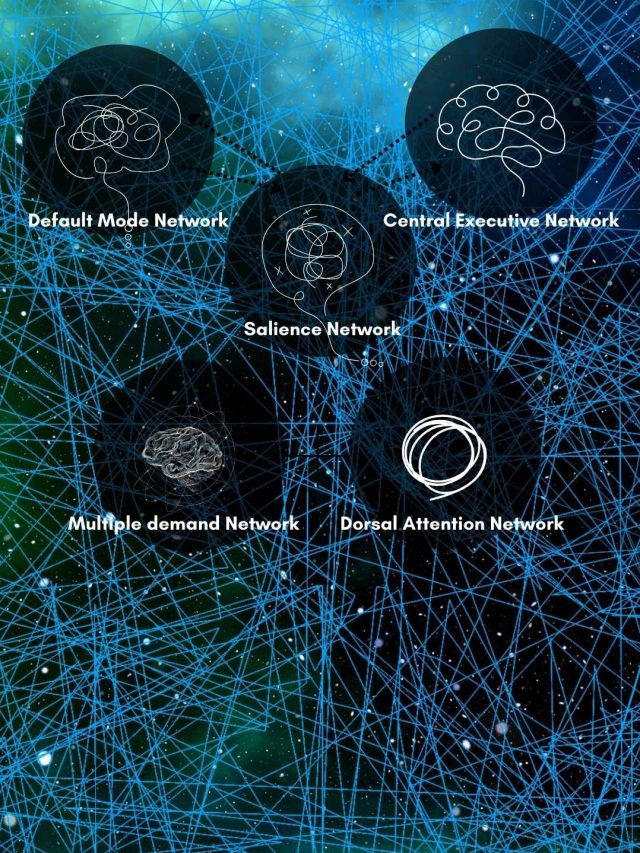

Longer answer – Humans have advanced, technologically & socially, largely due to the pre-frontal cortex and the frontal lobe which give us executive functions. Executive functions guide decision-making, planning, problem-solving, complex analysis of situations, etc. Cognitive biases interfere with these functions. For example, experts can fail to see good, novel solutions to problems or people could feel their superiors at work perform poorly. By bringing these errors into awareness and mitigating them, you will process and understand the information around you better. Simply by acknowledging the survivorship bias, you can avoid bad productivity advice and shield yourself from bad success stories. You will know how to make better decisions in stressful and relaxed situations alike. You will shop better, manage your resources better, and have healthier conversations with fewer misunderstandings. Personal, professional, and social interactions will significantly improve as you will

Now, I suppose, you’ll have a few strategies in your quiver to make good decisions by overcoming cognitive biases. Have fun thinking objectively!

Sources

[2]: https://doi.apa.org/doiLanding?doi=10.1037%2F0022-3514.39.5.752

[3]: https://www.sciencedirect.com/science/article/abs/pii/S0005796712000460

[4]: https://psycnet.apa.org/buy/2014-04862-001

[5]: https://www.sciencedirect.com/science/article/abs/pii/S0005791615300501

[6]: https://www.sciencedirect.com/science/article/abs/pii/S1364661397010929

[7]: https://journals.sagepub.com/doi/abs/10.1037/1089-2680.2.2.175

[8]: https://onlinelibrary.wiley.com/doi/abs/10.1002/bdm.676

[9]: https://www.sciencedirect.com/science/article/abs/pii/S1053535710001411

[10]: https://amzn.to/2IkgFOX

[11]: https://amzn.to/3ot7viC

[12]: https://www.sciencedirect.com/science/article/abs/pii/S0023969015000739

[13]: https://www.sciencedirect.com/science/article/abs/pii/S0022249609000224

[14]: https://journals.sagepub.com/doi/abs/10.1177/0146167210383440

[15]: https://www.sciencedirect.com/science/article/abs/pii/S073839910700434X

[16]: https://academic.oup.com/psychsocgerontology/article/67B/2/139/537897?login=true

[17]: https://journals.sagepub.com/doi/10.1177/0956797611432178

Hey! Thank you for reading; hope you enjoyed the article. I run Cognition Today to capture some of the most fascinating mechanisms that guide our lives. My content here is referenced and featured in NY Times, Forbes, CNET, and Entrepreneur, and many other books & research papers.

I am a psychology SME consultant in EdTech with a focus on AI cognition and Behavioral Engineering. I’m affiliated with myelin, an EdTech company in India, as well.

I’ve studied at NIMHANS Bangalore (positive psychology), Savitribai Phule Pune University (clinical psychology), Fergusson College (BA psych), and affiliated with IIM Ahmedabad (marketing psychology). I’m currently studying Korean at Seoul National University.

I’m based in Pune, India but living in Seoul, S. Korea. Love Sci-fi, horror media; Love rock, metal, synthwave, and K-pop music; can’t whistle; can play 2 guitars at a time.

Bro.. The first item here says focus on data. That’s true, but don’t you think sometimes we need to be really optimistic and have unrealistic belief to achieve success? Here I am referring to Upsc exams wherein data says 1 in 790 applicants gets selected, but those who got selected say they just had faith and never worried about competition. How to move ahead at such cross roads?

Hey, yes, focusing on data is important to overcome biases. However, it doesn’t mean one shouldn’t be optimistic. Both are compatible with each other. In your example of UPSC, it is good to be optimistic but it is also important to know what to expect.

The truth lies in a cognitive bias known as the survivorship bias – Those who were selected (survived) said that they had faith and never worried. But that’s only half the story. On the other side, there are those who had faith and never worried who also failed. Having faith and not worrying didn’t cause their success.

But that’s not it. Some people, in retrospect, downplay the negative emotions associated with a positive outcome.

In competitive situations like these, regulating emotions is important. Whether it’s the pressure, fear of failure, stress, anxiety, lack of confidence, disappointing others, etc. These aspects do affect performance. And sometimes, these factors hamper the preparation process too.

Regarding unrealistic beliefs, they’re not bad at all. It’s nice to dream and think big. But there is a point where over-optimism becomes a problem because of overconfidence, lack of effort, relying on luck, etc. Thinking big and high also requires some amount of strategy, thinking, healthy coping with failures, luck, good decisions, help, etc.

The optimism you are talking about sounds like a great idea to approach and take an opportunity. Many times, it is this optimism which puts people in the right place at the right time.