This is for those who want to build AI applications and conduct R&D to make better LLMs. I will introduce psycholinguistics in the context of LLMs because language is one of the most important “formats of data” today: it is how we talk with both AI and people. Psycholinguistics reveals weaknesses of LLMs that we can improve upon.

Technical post ahead.

Psycholinguistics

Psycholinguistics is a cross-over field[1] that combines the linguistic analysis of language with the psychological variables involved in interpreting and perceiving language. This approach doesn’t only treat sampled language as a gestalt phenomenon[2] (holistic understanding of whole passages) or as reductionist elements like syntax and parts of speech[3]. It also focuses on where[4] & how[5] humans pay attention (e.g., keywords), what they assume about the meaning[6] of sentences (e.g., metaphors), and how words are used[7] in implicit and explicitly stated contexts (e.g., “a minute” suggesting time or size based on the preceding or following words).

One of my first areas of text analysis was a psycholinguistic property called concreteness. Concreteness is a measure[8] of how abstract or tangible the meaning of a word is. For example, “apple” is a concrete word, but “kindness” is a more abstract concept. The abstractness or concreteness of a word predicts how well people interpret its meaning correctly and how much they agree with others’ interpretations.
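To make concreteness tangible, here is a minimal sketch that averages word-level ratings over a sentence. The lexicon `TOY_CONCRETENESS`, its 1-to-5 scale, and every rating in it are assumptions made up for illustration; a real analysis would use published concreteness norms instead.

```python
import re

# Hypothetical ratings on a 1 (abstract) to 5 (concrete) scale.
# These numbers are invented for illustration, not taken from published norms.
TOY_CONCRETENESS = {
    "apple": 5.0,
    "table": 4.9,
    "river": 4.8,
    "kindness": 1.8,
    "justice": 1.5,
    "idea": 1.6,
}


def mean_concreteness(text, lexicon=TOY_CONCRETENESS):
    """Average the ratings of the words we have ratings for; None if no word is rated."""
    words = re.findall(r"[a-z]+", text.lower())
    rated = [lexicon[w] for w in words if w in lexicon]
    if not rated:
        return None
    return sum(rated) / len(rated)


concrete_score = mean_concreteness("The apple is on the table by the river")
abstract_score = mean_concreteness("Kindness and justice are more than an idea")
print(concrete_score, abstract_score)
```

A lower average hints that readers are more likely to disagree about what the sentence means, which is the kind of signal a prompt-analysis step could use.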

I say all of this to highlight the ambiguity in language and what humans actually go through to understand language within a context. For this post, I am going to highlight LLMs’ blind spots to the psycholinguistics that come naturally to us.

LLMs have traditionally used techniques like creating facets[9] and concept activation[10] to extract context from a prompt. Recent models have improved contextual understanding with larger context windows. However, LLMs still have multiple issues with context, such as the “lost-in-the-middle[11]” problem, where information from the middle of a large prompt is ignored during response generation. Another problem[12] is identifying critically relevant text within a document.

Let’s look at all 4 of these: the two techniques and the two problems.

1. Creating Facets

Overview:

Facets are conceptual subcomponents or dimensions of a broader topic or entity. In LLMs, “creating facets” refers to the process of breaking down a complex idea or object into its constituent meaningful parts so the model can address each aspect more clearly. This helps in both generating structured responses and retrieving more focused information.

Example:

For the topic climate change, an LLM might automatically generate facets such as:

- Causes (e.g., greenhouse gases)

- Effects (e.g., rising sea levels)

- Solutions (e.g., renewable energy)

- Stakeholders (e.g., governments, corporations)

- Regional impact (e.g., Arctic vs. Tropics)

When answering a user query like “Explain the effects of climate change on agriculture,” the model can isolate and prioritize the relevant facet instead of addressing the entire topic broadly.
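The facet selection described above can be sketched with plain keyword overlap. This is a toy illustration under my own assumptions: the `FACETS` table and its cue words are hypothetical, and a real LLM does not select facets with an explicit lookup like this.

```python
import re

# Hypothetical facet table for the topic "climate change"; the cue words are illustrative.
FACETS = {
    "Causes": {"cause", "causes", "greenhouse", "emissions"},
    "Effects": {"effect", "effects", "impact", "consequences"},
    "Solutions": {"solution", "solutions", "renewable", "mitigation"},
    "Stakeholders": {"stakeholder", "stakeholders", "governments", "corporations"},
    "Regional impact": {"regional", "arctic", "tropics"},
}


def rank_facets(query, facets=FACETS):
    """Return (facet, overlap) pairs sorted by how many cue words appear in the query."""
    tokens = set(re.findall(r"[a-z]+", query.lower()))
    scores = {name: len(tokens & cues) for name, cues in facets.items()}
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)


ranked = rank_facets("Explain the effects of climate change on agriculture")
print(ranked[0])  # the "Effects" facet is the only one with a matching cue

ambiguous = rank_facets("What is the problem of climate change?")
print(ambiguous)  # no cue matches, so every facet scores 0
```

The second query shows the gap: a broad question gives the selector nothing to go on, so every facet looks equally relevant.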

These facets generally emerge from a broad understanding of what information a human is looking for. But unless a prompt specifies something like “Who are the main stakeholders of climate change R&D?”, the LLM’s response will contain a lot of noise and irrelevant information.

Plus, there is the problem of not understanding a human’s information requirement. A human could ask a broad question like “what is the problem of climate change?” while actually thinking “what is the hurdle in solving climate change issues?”, but the LLM interprets it as “what are the consequences of climate change?”.

In human conversations, this can be quickly understood through context that is created by a few contextual variables like:

Who are the 2 people? E.g., a professor & student, a climate change solution start-up founder focusing on solar power & an investor.

This information implicitly tells both humans the direction of the conversation, and LLMs don’t have it. For conversations to be more economical, LLMs need a way to pick up the psychological variables involved, because humans may not be that precise when writing prompts. We take context for granted because that is what we are used to.
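One low-tech way to hand an LLM this missing context is to state the roles explicitly in the prompt. The sketch below is only an illustration: `frame_prompt` and its wording are my own hypothetical helper, not an established API or the only way to do this.

```python
def frame_prompt(question, asker, responder):
    """Prepend who is asking and who is answering, the context humans take for granted."""
    return (
        f"The person asking is {asker}.\n"
        f"Answer as {responder}.\n"
        f"Question: {question}"
    )


question = "What is the problem of climate change?"

# Same question, two different conversations.
classroom = frame_prompt(question, "a student", "a professor")
pitch = frame_prompt(question, "an investor", "a founder of a solar power start-up")

print(classroom)
print()
print(pitch)
```

The question is identical in both prompts, but the framing points the answer toward consequences in one case and toward hurdles and solutions in the other.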

2. Concept Activation

Overview:

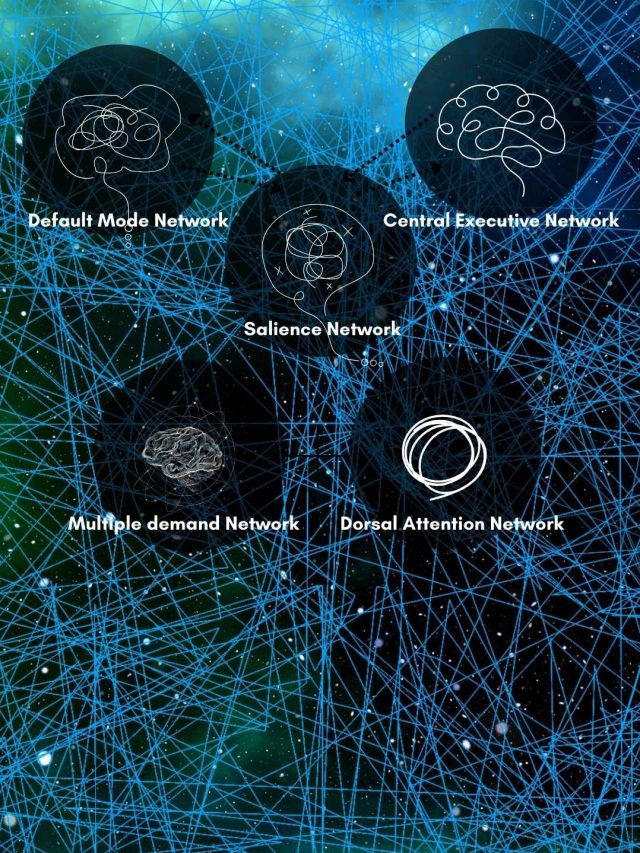

Concept activation involves internally “lighting up” neurons or attention pathways related to specific ideas or features. Inspired by neuroscience and interpretability research, concept activation in LLMs can refer to the process of stimulating latent representations of a concept within the model’s architecture when a prompt contains relevant cues.

Example:

When given the input: “A four-legged mammal that barks,” the model activates the internal concept cluster for “dog”—not because the word “dog” appears, but because it associates those features with the latent concept of “dog.”

A few days ago, a friend and I were talking about metal music, and he couldn’t remember a song. He asked ChatGPT and still couldn’t figure it out. He wasn’t sure which band it was, either. He kept thinking of the sky, and me being a metalhead, I casually asked him, “Are you talking about Ride the Lightning by Metallica?”

That was his song. Concepts in my head were activated differently from how ChatGPT was activating them for him. Even though he gave us the same information, I had access to a more filtered bit of information based on the vibe of other songs we were listening to at that time. That biased my concept activation on implicit context.

We have to figure out a way for AI to take different paths to activate concepts, even if those paths don’t make semantic sense. But the first problem for this psycholinguistic enhancement is contextual cues. For humans, they emerge through observation and presence of mind; for LLMs, they will have to come from a background data feed such as song history, search history, and background audio capture.
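Here is a toy sketch of how a background signal could bias concept activation. The songs are real, but the cue words, genre tags, and the scoring rule (cue overlap plus a flat bonus for recently played genres) are assumptions I made up for illustration; this is not how any particular LLM works internally.

```python
import re

# Toy catalogue: the songs are real, the cue words and genre tags are illustrative.
SONGS = [
    {"title": "Lucy in the Sky with Diamonds", "artist": "The Beatles",
     "genre": "rock", "cues": {"sky", "diamonds"}},
    {"title": "A Sky Full of Stars", "artist": "Coldplay",
     "genre": "pop", "cues": {"sky", "stars"}},
    {"title": "Ride the Lightning", "artist": "Metallica",
     "genre": "metal", "cues": {"sky", "lightning", "storm"}},
]


def activate(description, recent_genres=(), context_weight=1.0):
    """Score each song by cue overlap, plus a bonus if its genre matches recent listening."""
    tokens = set(re.findall(r"[a-z]+", description.lower()))
    scored = []
    for song in SONGS:
        cue_score = len(tokens & song["cues"])
        context_bonus = context_weight if song["genre"] in recent_genres else 0.0
        scored.append((cue_score + context_bonus, song["title"]))
    # sorted() is stable, so tied songs keep their catalogue order.
    return sorted(scored, key=lambda pair: pair[0], reverse=True)


no_context = activate("something about the sky")
with_context = activate("something about the sky", recent_genres={"metal"})

print(no_context)       # all three songs tie on the single cue "sky"
print(with_context[0])  # the listening history breaks the tie
```

Without the listening history, the description alone cannot separate the candidates. With it, the same description lands on the song my friend meant.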

3. Lost-in-the-Middle

Overview:

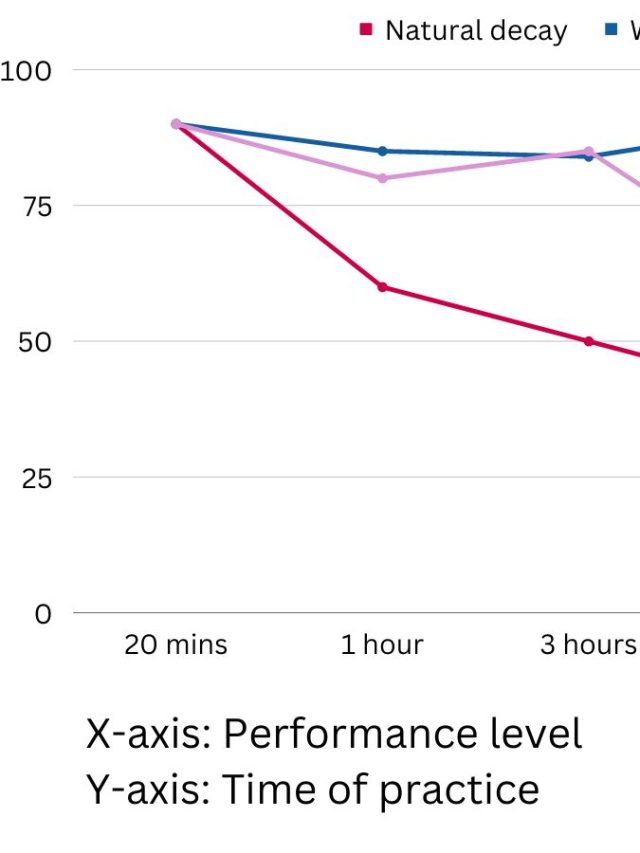

This refers to a context window limitation where information presented in the middle of a long prompt is more likely to be ignored or underweighted during response generation. LLMs show a primacy-recency bias, favoring information at the beginning or end of the prompt due to the way attention weights degrade across long contexts. Humans have the same problem. But we also have other biases that extract a reasonably good bit of the signal from the noise: we are biased to extract emotion, faces, locations, people, actions, core ideas, philosophies, references to something familiar, etc. That makes us good “compressors” of information, even if the compressed output doesn’t represent the original as well as expected.

Example:

Imagine a 4,000-token prompt describing a legal case. If the crucial clause defining liability is buried in the middle (say, token 2,000), the model might skip over it or deprioritize it in the final summary or analysis.

This becomes a major issue in long-form document understanding or multi-document summarization, even with models that support 100k+ token contexts (like Claude or GPT-4 Turbo).

Humans read a long document with certain expectations. These expectations guide what we consider “signal” and what we consider “noise”. Since AI is not reading a document with expectations like ours (even if our prompt creates some), an LLM is likely to miss different things than we do.
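If you want to check this effect on your own model, a simple probe is to bury the same key sentence at different depths of an otherwise identical prompt and ask the same question each time. The sketch below only builds the prompts. The filler text, the wording of the clause, and the helper name `build_probe` are hypothetical, and sending the prompts to a model is left out on purpose.

```python
def build_probe(filler, needle, position):
    """Insert `needle` into a list of filler sentences at a relative position in [0, 1]."""
    if not 0.0 <= position <= 1.0:
        raise ValueError("position must be between 0 and 1")
    index = round(position * len(filler))
    sentences = filler[:index] + [needle] + filler[index:]
    return " ".join(sentences)


# Hypothetical filler and key clause, standing in for a long legal document.
filler = [f"Background detail number {i}." for i in range(100)]
needle = "Clause 7: the supplier is liable for all shipping damage."

probes = {
    name: build_probe(filler, needle, position)
    for name, position in {"start": 0.0, "middle": 0.5, "end": 1.0}.items()
}

for name, text in probes.items():
    print(name, text.index(needle))  # character offset of the key clause
```

Asking “Who is liable for shipping damage?” against each probe and comparing the answers gives a rough picture of where your model starts losing the clause.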

This aspect of psycholinguistics is about listeners anticipating information and prioritizing specific information. Generally, humans prioritize:

- Humans & emotions

- Locations and geographies

- Descriptions and Labels/titles

- The story aspect: how, why, where, when, and to whom things happen.

These elements can be reinforced in LLMs and labeled as more important within the attention window. But there are more problems. While reading purely informational posts, humans behave differently: they read, update information in their working memory, prioritize things, and actively think. In this process, their priorities can change. For example, clause 4 in a legal document might suggest something that leads the person to keep that clause in mind while interpreting other clauses. This is an “exploratory bias” with which humans seek information. If a human were asked to summarize the document, this exploratory bias would change what the summary is.

So, compared to AI, humans will summarize differently: not better, not worse, just differently. That difference is about human judgment. This can be addressed with prompt engineering, but it isn’t easy to estimate how well a basic prompt like “summarize this document” captures essential details.

LLMs may be lost-in-the-middle, but humans are lost-in-many-places-but-not-in-the-important-ones.

4. Spotting Relevant Information

Overview:

This is the model’s ability to identify which parts of the input are most critical to answering a question or performing a task. While transformers apply attention to all tokens, this attention isn’t always semantically aligned with human relevance. Humans don’t do a data dump. Humans format data. They put it into sheets, structure it, and give it titles and subtitles. They repeat things redundantly, because that’s how humans signal importance. That structure is created to bring important information into awareness. An LLM can also learn this, but an LLM is likely to over-interpret and emphasize things for other reasons, such as the position of information in the text or a keyword that happens to align more closely with the LLM’s activated concepts.

Example:

Given a resume and a job description, if asked “Is this candidate qualified?”, a strong model should align years of experience, specific skills, and education. It should ignore irrelevant fluff like hobbies or generic phrases. But naive models might latch onto keywords without evaluating deeper relevance.
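The difference between latching onto keywords and evaluating relevance can be sketched as follows. Everything here is hypothetical: the job requirements, the resume text, and the `CANDIDATE_RECORD` fields, which I assume some upstream extraction step has already pulled out of the resume.

```python
import re

# Hypothetical job requirements and candidate data; all values are illustrative.
JOB = {"skills": {"python", "sql"}, "min_years": 3}

CANDIDATE_TEXT = (
    "Passionate team player who loves Python and SQL puzzles as a hobby. "
    "One year of experience as a data analyst working in Excel."
)
# Assumed output of an upstream step that extracts professional skills and experience.
CANDIDATE_RECORD = {"skills": {"excel"}, "years": 1}


def keyword_score(text, job):
    """Naive matching: fraction of required skills that appear anywhere in the text."""
    tokens = set(re.findall(r"[a-z]+", text.lower()))
    return len(tokens & job["skills"]) / len(job["skills"])


def structured_match(record, job):
    """Compare extracted fields against each requirement instead of raw keywords."""
    has_skills = job["skills"] <= record["skills"]
    has_years = record["years"] >= job["min_years"]
    return has_skills and has_years


naive = keyword_score(CANDIDATE_TEXT, JOB)
careful = structured_match(CANDIDATE_RECORD, JOB)
print(naive, careful)
```

The keyword score is perfect because the hobby sentence mentions both skills, while the structured check fails on both skills and years of experience. The hard part, which this sketch skips, is the extraction step that decides what counts as a real qualification.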

This is a very tricky problem to solve.

We have to make 1 assumption: humans aren’t always the best judges of signal versus noise, because this varies dramatically from novice to expert, and novices and experts may each find different valuable signals within the noise. Look up the Einstellung effect to learn more. An expert film director would easily know what is pure CGI and what is CGI-enhanced. But a novice may find a smart fix to a bug in code while the expert is stuck in their own head.

Unless we give an LLM sufficient information in the prompt, finding the signal in the noise is difficult.

Let’s take an easy example: Spot the N in MMMMMNMMMMMM. Humans have perceptual errors that make this difficult. LLMs don’t have those for text because embeddings are precise values that can be differentiated. For the sake of this article, this is not what I mean by finding the signal in the noise. Humans are currently valued because they can find signals without knowing in advance what signals may exist. Take the screenshots on your phone. All those memes and embarrassing chats or gossip dumps from your bestie hide a signal: 1 or 2 important screenshots. While scrolling, you may find them faster than an LLM because an LLM would likely summarize the whole screenshot dump as memes and gossip, just because those appear so frequently. The contrast is easy for a human to find.
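Both halves of this example fit in a few lines. The first part shows why the N is trivial for code. The second part is a sketch under my own assumptions: the screenshot labels are invented, a real pipeline would need a classifier to produce them, and treating rarity as a stand-in for importance is only a rough proxy.

```python
from collections import Counter

# Finding the odd character is trivial for code: every character is a distinct value.
row = "MMMMMNMMMMMM"
n_position = row.index("N")  # zero-based position of the N
print(n_position)

# Hypothetical labels for a screenshot dump; in practice a classifier would produce them.
labels = ["meme"] * 40 + ["gossip"] * 25 + ["boarding pass", "bank OTP"]
counts = Counter(labels)

# A frequency-driven summary only reports what dominates the dump.
majority_summary = [label for label, _ in counts.most_common(2)]

# Looking for contrast instead surfaces the rare items a human would notice.
rare_items = [label for label, n in counts.items() if n == 1]

print(majority_summary)
print(rare_items)
```

The frequency view reproduces the “memes and gossip” summary, while the contrast view pulls out the two screenshots that actually matter in this made-up dump.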

Takeaway

In engineering better LLMs, there is a roadblock: a lack of data streams that bias an LLM’s processing of prompts. This creates a context deficit and an attention deficit, as well as ambiguity about what a person thinks vs. what a person says in a prompt.

An AI psycholinguistics solution will need to involve signals from human thinking that translate into language for LLMs.

Sources

[2]: https://www.frontiersin.org/journals/psychology/articles/10.3389/fpsyg.2021.649384/full

[3]: https://www.cambridge.org/core/journals/journal-of-linguistics/article/abs/partsofspeech-systems-and-word-order/5C9D621642E10F51607367DE39853681

[4]: https://link.springer.com/article/10.3758/BF03197383

[5]: https://www.sciencedirect.com/science/article/abs/pii/S0022537179905346

[6]: https://www.degruyter.com/document/doi/10.1515/cogl.1993.4.4.335/html

[7]: https://www.jstage.jst.go.jp/article/arele/21/0/21_KJ00007108619/_article/-char/ja/

[8]: https://onlinelibrary.wiley.com/doi/abs/10.1207/s15516709cog0000_33

[9]: https://dl.acm.org/doi/abs/10.1145/3471158.3472257

[10]: https://link.springer.com/article/10.1007/s00405-023-08337-7

[11]: https://proceedings.neurips.cc/paper_files/paper/2024/hash/71c3451f6cd6a4f82bb822db25cea4fd-Abstract-Conference.html

[12]: https://ieeexplore.ieee.org/abstract/document/10705235

Hey! Thank you for reading; hope you enjoyed the article. I run Cognition Today to capture some of the most fascinating mechanisms that guide our lives. My content here is referenced and featured in NY Times, Forbes, CNET, and Entrepreneur, as well as many books & research papers.

I’m a psychology SME consultant in EdTech with a focus on AI cognition and Behavioral Engineering. I’m also affiliated with myelin, an EdTech company in India.

I’ve studied at NIMHANS Bangalore (positive psychology), Savitribai Phule Pune University (clinical psychology), and Fergusson College (BA psych), and I’m affiliated with IIM Ahmedabad (marketing psychology). I’m currently studying Korean at Seoul National University.

I’m based in Pune, India but living in Seoul, S. Korea. Love Sci-fi, horror media; Love rock, metal, synthwave, and K-pop music; can’t whistle; can play 2 guitars at a time.